6.3(2): Population mean (Statistical hypothesis testing)

Problem 6.9 [Statistical hypothesis testing]

Consider the simultaneous normal measurement ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu, \sigma)]})$. Assume the null hypothesis $H_N$ such that

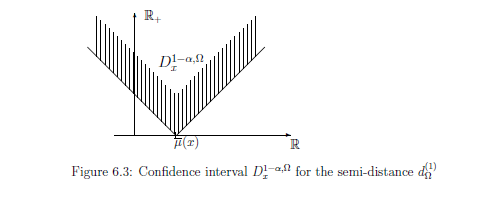

For any $\omega=(\mu, \sigma ) (\in \Omega= {\mathbb R} \times {\mathbb R}_+ )$, define the positive number $\eta^\alpha_{\omega}$ such that:

\begin{align} \eta^\alpha_{\omega} = \inf \{ \eta > 0: [{G}^n (E^{-1} ( { { Ball}^C_{d_{\Theta}^{(1)}}}(\pi(\omega) ; \eta)))](\omega ) \le \alpha \} \nonumber \end{align}

where ${{ Ball}^C_{d_{\Theta}^{(1)}}}(\pi( \omega ) ; \eta)$ $=$ $\{ \theta (\in\Theta): d_{\Theta}^{(1)} (\mu, \theta ) \ge \eta \}$ $= ( -\infty, \mu - \eta] \cup [\mu + \eta , \infty )$.

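Hence we see that

\begin{align} [{G}^n (E^{-1}({{ Ball}^C_{d_{\Theta}^{(1)}}}(\pi(\omega) ; \eta )))] (\omega) = \frac{1}{\sqrt{2 \pi }} \int_{|x| \ge \sqrt{n} \eta/\sigma} \exp[- \frac{x^2}{2}] d x , \nonumber \end{align}

and therefore $\eta^\alpha_{\omega} = \frac{\sigma}{\sqrt{n}} z(\frac{\alpha}{2})$, where $z(\frac{\alpha}{2})$ is defined by $\frac{1}{\sqrt{2 \pi }} \int_{z(\alpha/2)}^{\infty} \exp[- \frac{x^2}{2}] d x = \frac{\alpha}{2}$.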

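Therefore, we get ${\widehat R}_{H_N}^{\alpha; \Theta}$ (the $(\alpha)$-rejection region of $H_N (= \{ \mu_0\} \subseteq \Theta (= {\mathbb R}))$) as follows:

\begin{align} {\widehat R}_{\{ \mu_0 \}}^{\alpha; \Theta} = \{ E(x) (\in \Theta = {\mathbb R}) \;:\; | \mu_0 - \frac{x_1+ \ldots + x_n}{n}| \ge \frac{\sigma}{\sqrt{n}} z(\frac{\alpha}{2}) \} \nonumber \end{align}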


Then, find the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (which may depend on $\sigma$) such that

Here, the larger the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}$ is, the better.

$\bullet$ the probability that a measured value $x (\in {\mathbb R}^n )$ obtained by ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu_0, \sigma)]})$ satisfies

\begin{align} E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta} \end{align}

is at most $\alpha$.
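The defining condition above can be checked numerically for any candidate region. The following Python sketch is a minimal illustration only: it assumes the region is given as a predicate on the sample mean $E(x)$, and the helper name `rejection_probability` is hypothetical. It estimates the probability by Monte Carlo, drawing $x = (x_1, \ldots, x_n)$ from the state $S_{[(\mu_0, \sigma)]}$.

```python
import random
from statistics import fmean


def rejection_probability(in_region, mu0, sigma, n, trials=20000, seed=0):
    """Monte Carlo estimate of P[E(x) in R] when x ~ N(mu0, sigma^2)^n.

    `in_region` is a predicate on the sample mean E(x); it stands in for a
    candidate rejection region (hypothetical helper, for illustration only).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        xs = [rng.gauss(mu0, sigma) for _ in range(n)]
        if in_region(fmean(xs)):  # E(x) = (x_1 + ... + x_n) / n
            hits += 1
    return hits / trials


# Candidate region "E(x) >= mu0 + 1" with sigma = 1, n = 4 (two standard errors).
p = rejection_probability(lambda m: m >= 1.0, mu0=0.0, sigma=1.0, n=4)
print(p)  # should be close to P(Z >= 2), about 0.0228
```

A region is admissible for level $\alpha$ when this estimate (up to Monte Carlo error) does not exceed $\alpha$.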

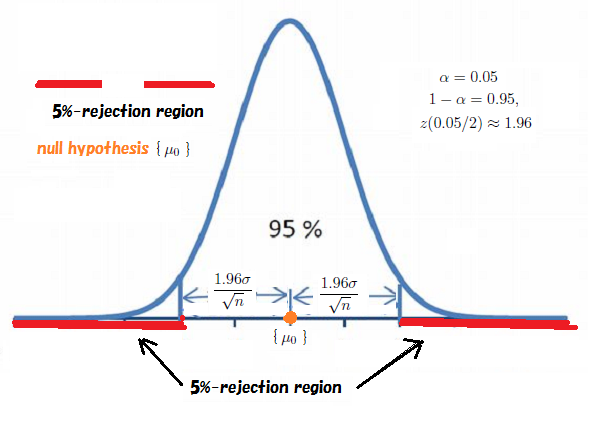

Note that ${\widehat R}_{\{\mu_0\}}^{\alpha, \Theta}$ (the $(\alpha)$-rejection region of $\{ \mu_0\}$) depends on $\sigma$. Thus, putting

\begin{align} {\widehat R}_{\{ \mu_0 \} \times {\mathbb R}_+}^{\alpha} = \{ (\overline{\mu}(x), \sigma) \in {\mathbb R} \times {\mathbb R}_+ \;:\; | \mu_0 - \overline{\mu}(x)| = | \mu_0 - \frac{x_1+ \ldots + x_n}{n}| \ge \frac{\sigma}{\sqrt{n}} z(\frac{\alpha}{2}) \} \tag{6.36} \end{align}

we see that ${\widehat R}_{ \{\mu_0\}\times {\mathbb R}_+}^{\alpha}$ is the hatched region in Figure 6.4.
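As a sketch using only the Python standard library (the function names `z_upper` and `reject_two_sided` are hypothetical), the two-sided region (6.36) can be evaluated as follows, with $z(\alpha/2)$ obtained from the inverse normal CDF. The computation of `size` also confirms that, under $H_N$, the probability of landing in the region is exactly $\alpha$.

```python
from statistics import NormalDist, fmean


def z_upper(p):
    """Upper p-point of N(0, 1): the z with P(Z >= z) = p."""
    return NormalDist().inv_cdf(1.0 - p)


def reject_two_sided(xs, mu0, sigma, alpha):
    """True iff |mu0 - mean(xs)| >= sigma / sqrt(n) * z(alpha/2), as in (6.36)."""
    n = len(xs)
    threshold = sigma / n ** 0.5 * z_upper(alpha / 2)
    return abs(mu0 - fmean(xs)) >= threshold


alpha = 0.05
# Size of the test under H_N: P(|Z| >= z(alpha/2)) = 2 * (1 - Phi(z(alpha/2))).
size = 2 * (1 - NormalDist().cdf(z_upper(alpha / 2)))
print(round(size, 6))  # 0.05
# Sample mean 1.0 vs threshold (1/2) * 1.96 = 0.98, so H_N = {0} is rejected.
print(reject_two_sided([1.2, 0.8, 1.1, 0.9], mu0=0.0, sigma=1.0, alpha=alpha))  # True
```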

Our present problem was as follows:

Find the rejection region ${\widehat R}_{{H_N}}^{\alpha; \Theta}( \subseteq \Theta)$ (which may depend on $\sigma$) such that

$\bullet$ the probability that a measured value $x (\in {\mathbb R}^n )$ obtained by ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu_0, \sigma)]})$ satisfies

\begin{align} E(x) \in {\widehat R}_{{H_N}}^{\alpha; \Theta} \end{align}

is at most $\alpha$.

[Rejection region of $H_N=( -\infty , \mu_0] \subseteq \Theta (={\mathbb R})$]. Consider the simultaneous measurement ${\mathsf M}_{L^\infty ({\mathbb R} \times {\mathbb R}_+)}$ $({\mathsf O}_G^n = ({\mathbb R}^n, {\mathcal B}_{\mathbb R}^n, {{{G}}^n}) ,$ $S_{[(\mu, \sigma)]})$ in $L^\infty ({\mathbb R} \times {\mathbb R}_+)$. Thus, we take $\Omega = {\mathbb R} \times {\mathbb R}_+$ and $X={\mathbb R}^n$. Assume that $\sigma$ in the state $\omega = (\mu, \sigma ) \in \Omega $ is fixed and known. Put

\begin{align} \Theta = {\mathbb R} \end{align}

Formula (6.3) suggests defining the estimator $E: {\mathbb R}^n \to \Theta (\equiv {\mathbb R} )$ such that

\begin{align} E(x) = \overline{\mu}(x) = \frac{x_1 + x_2 + \cdots + x_n}{n} \tag{6.37} \end{align}

Also consider the quantity $\pi: \Omega \to \Theta $ such that

\begin{align} \Omega={\mathbb R} \times {\mathbb R}_+ \ni \omega = (\mu, \sigma ) \mapsto \pi (\omega ) = \mu \in \Theta={\mathbb R} \end{align}

Consider the following semi-distance $d_{\Theta}^{(2)}$ in $\Theta (={\mathbb R} )$:

\begin{align} d_{\Theta}^{(2)}(\theta_1, \theta_2) = \left\{\begin{array}{ll} |\theta_1 - \theta_2| \quad & \theta_0 \le \theta_1, \theta_2 \\ |\theta_2 - \theta_0| \quad & \theta_1 \le \theta_0 \le \theta_2 \\ |\theta_1 - \theta_0| \quad & \theta_2 \le \theta_0 \le \theta_1 \\ 0 \quad & \theta_1 , \theta_2 \le \theta_0 \end{array}\right. \tag{6.38} \end{align}

where $\theta_0 = \mu_0$. Define the null hypothesis $H_N$ such that
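To make the case analysis in (6.38) concrete, here is a minimal Python transcription of $d_{\Theta}^{(2)}$; the function name `d2` and the explicit argument `theta0` (playing the role of $\theta_0$) are our own choices. Any two points of $(-\infty, \theta_0]$ are at distance $0$, which is why $d_{\Theta}^{(2)}$ is only a semi-distance.

```python
def d2(theta1, theta2, theta0):
    """Semi-distance d_Theta^(2) of (6.38), with threshold theta0."""
    if theta1 >= theta0 and theta2 >= theta0:
        return abs(theta1 - theta2)      # both to the right of theta0
    if theta1 <= theta0 <= theta2:
        return abs(theta2 - theta0)      # only theta2's excess over theta0 counts
    if theta2 <= theta0 <= theta1:
        return abs(theta1 - theta0)      # only theta1's excess over theta0 counts
    return 0.0                           # both theta1, theta2 <= theta0


print(d2(-3.0, -1.0, 0.0))  # 0.0: distinct points of (-inf, 0] are not separated
print(d2(-1.0, 2.0, 0.0))   # 2.0
```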

\begin{align} H_N= ( -\infty , \mu_0] (\subseteq \Theta (= {\mathbb R})) \end{align}

For any $\omega=(\mu, \sigma ) (\in \Omega= {\mathbb R} \times {\mathbb R}_+ )$, define the positive number $\eta^\alpha_{\omega}$ such that:

\begin{align} \eta^\alpha_{\omega} = \inf \{ \eta > 0: [{G}^n (E^{-1} ( { { Ball}^C_{d_{\Theta}^{(2)}}}(\pi(\omega) ; \eta)))](\omega ) \le \alpha \} \nonumber \end{align}

where ${{ Ball}^C_{d_{\Theta}^{(2)}}}(\pi( \omega ) ; \eta)$ $=$ $\{ \theta (\in\Theta): d_{\Theta}^{(2)} (\mu, \theta ) \ge \eta \}$, which at the boundary point $\mu = \mu_0$ of $H_N$ equals $[\mu + \eta , \infty )$.

Hence we see that

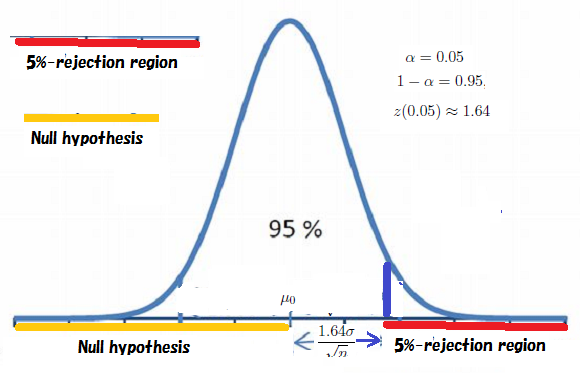

\begin{align} & E^{-1}({{ Ball}^C_{d_{\Theta}^{(2)}}}(\pi (\omega) ; \eta )) = E^{-1} \Big( [\mu + \eta , \infty ) \Big) \nonumber \\ = & \{ (x_1, \ldots , x_n ) \in {\mathbb R}^n \;: \; \mu + \eta \le \frac{x_1+\ldots + x_n }{n} \} \nonumber \\ = & \{ (x_1, \ldots , x_n ) \in {\mathbb R}^n \;: \; \frac{(x_1- \mu)+\ldots + (x_n- \mu) }{n} \ge \eta \} \tag{6.39} \end{align}

Thus,

\begin{align} & [{G}^n (E^{-1}({{ Ball}^C_{d_{\Theta}^{(2)}}}(\pi(\omega) ; \eta )))] (\omega) \nonumber \\ = & \frac{1}{({\sqrt{2 \pi }\sigma})^n} \underset{ \frac{(x_1- \mu)+\ldots + (x_n- \mu) }{n} \ge \eta }{\int \cdots \int} \exp[- \frac{\sum_{k=1}^n (x_k - \mu )^2 } {2 \sigma^2} ] d x_1 d x_2 \cdots dx_n \nonumber \\ = & \frac{1}{({\sqrt{2 \pi }\sigma})^n} \underset{ \frac{x_1+\ldots + x_n }{n} \ge \eta }{\int \cdots \int} \exp[- \frac{\sum_{k=1}^n x_k^2 } {2 \sigma^2} ] d x_1 d x_2 \cdots dx_n \nonumber \\ = & \frac{\sqrt{n}}{\sqrt{2 \pi }\sigma} \int_{x \ge \eta} \exp[- \frac{n x^2 }{2 \sigma^2}] d x = \frac{1}{\sqrt{2 \pi }} \int_{x \ge \sqrt{n} \eta/\sigma} \exp[- \frac{x^2 }{2 }] d x \tag{6.40} \end{align}

Solving the following equation for $z(\alpha)$:

\begin{align} \frac{1}{\sqrt{2 \pi }} \int_{z(\alpha)}^{\infty} \exp[- \frac{x^2 }{2 }] d x = \alpha \tag{6.41} \end{align}

we obtain

\begin{align} \eta^\alpha_{\omega} = \frac{\sigma}{\sqrt{n}} z({\alpha}) \tag{6.42} \end{align}

Then, we get ${\widehat R}_{H_N}^{\alpha, \Theta}$ (the $(\alpha)$-rejection region of $H_N (= ( - \infty , \mu_0] \subseteq \Theta (= {\mathbb R}))$) as follows:
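The closed form (6.42) can be cross-checked numerically. The sketch below evaluates the one-sided tail probability (6.40) with Python's `statistics.NormalDist` and finds the infimum defining $\eta^\alpha_{\omega}$ by bisection; bisection is a numerical method chosen here for illustration, not part of the original derivation. It assumes $0 < \alpha < 1/2$, so that $\eta^\alpha_{\omega} > 0$, and the function names are hypothetical.

```python
from statistics import NormalDist


def upper_tail_prob(eta, sigma, n):
    """P[(x_1 + ... + x_n)/n - mu >= eta] for x ~ N(mu, sigma^2)^n, as in (6.40)."""
    return 1.0 - NormalDist().cdf(n ** 0.5 * eta / sigma)


def eta_by_bisection(alpha, sigma, n, tol=1e-12):
    """inf{eta > 0 : upper_tail_prob(eta) <= alpha}, found by bisection.

    The tail probability is decreasing in eta, so the feasible set is [eta*, inf).
    """
    lo, hi = 0.0, 1.0
    while upper_tail_prob(hi, sigma, n) > alpha:  # enlarge bracket until feasible
        hi *= 2.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if upper_tail_prob(mid, sigma, n) <= alpha:
            hi = mid  # mid is feasible: the infimum is at or below mid
        else:
            lo = mid  # mid is infeasible: the infimum is above mid
    return hi


alpha, sigma, n = 0.05, 2.0, 9
closed_form = sigma / n ** 0.5 * NormalDist().inv_cdf(1.0 - alpha)  # (6.42)
print(eta_by_bisection(alpha, sigma, n), closed_form)
```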

\begin{align} {\widehat R}_{ ( - \infty , \mu_0]}^{\alpha, \Theta} & = \bigcap_{\pi(\omega ) = \mu \in ( - \infty , \mu_0] } \{ {E(x)} (\in \Theta= {\mathbb R}) : d_{\Theta}^{(2)} (E(x), \pi (\omega)) \ge \eta^\alpha_{\omega } \} \nonumber \\ & = \{ E(x) (= \frac{x_1+ \ldots + x_n}{n}) \in {\mathbb R} \;:\; \frac{x_1+ \ldots + x_n}{n} - \mu_0 \ge \frac{\sigma}{\sqrt{n}} z({\alpha}) \} \tag{6.43} \end{align}
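A minimal sketch of the one-sided test (6.43), again in Python with hypothetical function names. The helper `rejection_prob` computes the exact probability that the test rejects when the true mean is $\mu$, using $\overline{\mu}(x) \sim N(\mu, \sigma^2/n)$. It increases with $\mu$ and equals $\alpha$ at $\mu = \mu_0$, so the probability of wrongly rejecting $H_N$ is at most $\alpha$ for every $\mu \in (-\infty, \mu_0]$.

```python
from statistics import NormalDist, fmean


def reject_one_sided(xs, mu0, sigma, alpha):
    """True iff mean(xs) - mu0 >= sigma / sqrt(n) * z(alpha), as in (6.43)."""
    n = len(xs)
    z_alpha = NormalDist().inv_cdf(1.0 - alpha)
    return fmean(xs) - mu0 >= sigma / n ** 0.5 * z_alpha


def rejection_prob(mu, mu0, sigma, n, alpha):
    """Exact P_mu[test rejects] when x ~ N(mu, sigma^2)^n."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha)
    # Standardize the threshold mu0 + sigma/sqrt(n) * z(alpha) for mean ~ N(mu, sigma^2/n).
    return 1.0 - NormalDist().cdf(z_alpha + n ** 0.5 * (mu0 - mu) / sigma)


alpha, mu0, sigma, n = 0.05, 0.0, 1.0, 16
for mu in (-1.0, -0.5, -0.1, 0.0):
    print(mu, rejection_prob(mu, mu0, sigma, n, alpha))
```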

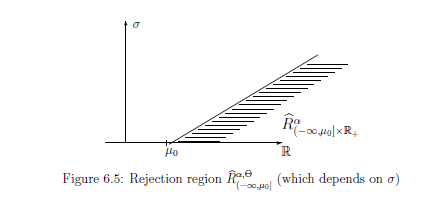

Thus, in the same way as in Remark 6.10, we see that ${\widehat R}_{ (- \infty, \mu_0 ] \times {\mathbb R}_+}^{\alpha}$ is the hatched region in Figure 6.5, where

\begin{align} {\widehat R}_{( - \infty, \mu_0 ] \times {\mathbb R}_+}^{\alpha} = \{ (E(x) (= \frac{x_1+ \ldots + x_n}{n}), \sigma) \in {\mathbb R} \times {\mathbb R}_+ \;:\; \frac{x_1+ \ldots + x_n}{n} - \mu_0 \ge \frac{\sigma}{\sqrt{n}} z({\alpha}) \} \tag{6.44} \end{align}